Dagstuhl Seminar 23452

Human-AI Interaction for Work

(Nov 05 – Nov 10, 2023)


Organizers

- Susanne Boll (Universität Oldenburg, DE)

- Andrew L. Kun (University of New Hampshire - Durham, US)

- Bastian Pfleging (TU Bergakademie Freiberg, DE)

- Orit Shaer (Wellesley College, US)

Contact

- Andreas Dolzmann (for scientific matters)

- Simone Schilke (for administrative matters)

Schedule

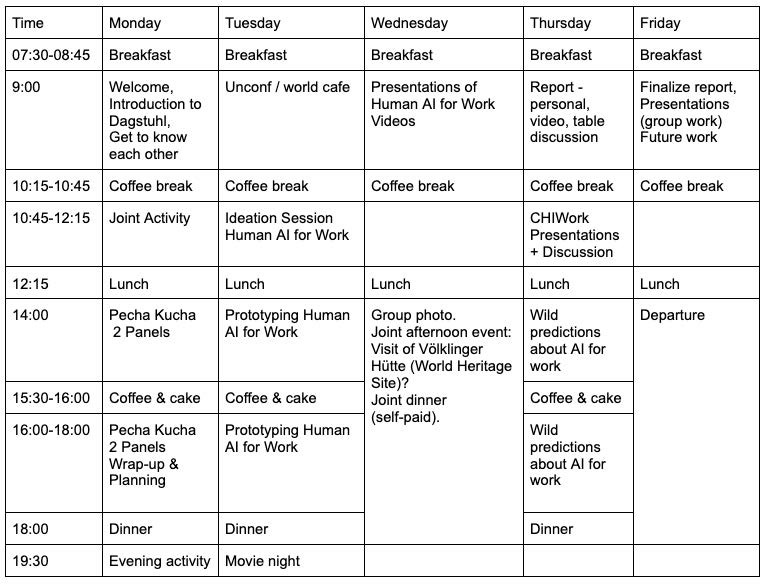

Broadly, seminar participants collaborated in asking: “What are the knowledge gaps that we collectively face regarding the design, implementation techniques, and evaluation methods and instruments for novel models of human-AI collaboration for work?” We approached this broad question by focusing on the following interrelated specific research questions. Figure 1 provides an overview of the initially scheduled seminar activities. The subsequent sections outline the different activities and the results obtained in these sessions.

- RQ1 How do we allocate tasks between humans and automation? Automated systems have been around for decades. Today, however, computer-based automated apps and devices are woven into our professional lives to a greater extent than ever before. Our dependency on automated systems such as conversational agents, expert systems, vehicles, and drones in daily tasks is likely to increase in the near future. This will require new forms of human-automation interaction that allow us to make decisions and collaborate with automation to achieve our goals. A key question in designing this interaction is how to divide tasks between the human and the AI. In many practical settings, task division is a difficult problem [1, 2]. We explored how to create guidelines for task division in various work-related contexts.

- RQ2 How can interfaces allow for the appropriate level of human trust in machines, and reduce incorrect use and confusion? Whenever automation is involved, we need to design user interfaces that support what Lee and See call calibrated trust [3], that is, a level of trust that is appropriate for the capabilities of the automated system. If trust is not calibrated, human-AI interaction can suffer in two ways. If the human user trusts the AI too much, they will tend to accept AI suggestions and decisions without sufficient critical reflection, and in some cases this will lead them to accept bad suggestions or decisions. If, on the other hand, the human trusts the AI too little, they will ignore valuable input from it. We explored human-AI interaction designs that allow users to appropriately calibrate their level of trust in the AI.

- RQ3 How do we support user attention? The broad question of attention is relevant in many work contexts. Examples include mobile environments such as an automated vehicle, where the user might have to drive some of the time [4, 5], and the home office, where multiple distractions could compete for the user’s attention [6].

- RQ4 How do we create and leverage new human-automation interaction technology, and support both work and worker wellbeing? How can technologies such as speech interaction, augmented and virtual reality, and tangible interfaces support human-automation interaction? How can we ensure that these technologies are used ethically? Furthermore, as Yuval Noah Harari points out in his book “21 Lessons for the 21st Century” [7], AI might soon become better than we are at many tasks. How can human users best use, collaborate with, and benefit from such super-smart AI?

References

- Teodorovicz, T., Sadun, R., Kun, A. L., and Shaer, O. (2021). Working from home during COVID-19: Evidence from time-use studies. Harvard Business School.

- Teodorovicz, T., Kun, A. L., Sadun, R., and Shaer, O. (2022). Multitasking while driving: A time use study of commuting knowledge workers to assess current and future uses. International Journal of Human-Computer Studies, 162, 102789.

- Rinott, M., Geiger, S., Nenner, N., Topaz, O., Karmon, A., and Blake, K. (2021). Designing an embodied conversational agent for a learning space. In Proceedings of the 2021 ACM Designing Interactive Systems Conference (DIS ’21), 1324–1335. Association for Computing Machinery. https://doi.org/10.1145/3461778.3462108

- Gruenefeld, U., Prädel, L., Illing, J., Stratmann, T., Drolshagen, S., and Pfingsthorn, M. (2020). Mind the ARm: realtime visualization of robot motion intent in head-mounted augmented reality. In Proceedings of Mensch und Computer 2020 (MuC ’20), 259–266. Association for Computing Machinery. https://doi.org/10.1145/3404983.3405509

- Drolshagen, S., Pfingsthorn, M., and Hein, A. (2023). Context-Aware Robotic Assistive System: Robotic Pointing Gesture-Based Assistance for People with Disabilities in Sheltered Workshops. Robotics, 12(5), 132. https://doi.org/10.3390/robotics12050132

- Mahajan, S., Alkhudaidi, K., Boll, R., Reis, J., and Solovey, E. (2022). Role of Technology in Increasing Representation of Deaf Individuals in Future STEM Workplaces. In Proceedings of the 1st Annual Meeting of the Symposium on Human-Computer Interaction for Work (CHIWORK ’22), Article 16, 1–6. Association for Computing Machinery. https://doi.org/10.1145/3533406.3533421

- Puiutta, E., Abdenebaoui, L., and Boll, S. (2023). The Importance of Trust and Acceptance in User-Centred XAI – Practical Implications for a Manufacturing Scenario. CHI 2023 Workshop on Trust and Reliance in AI-Human Teams (TRAIT).

Susanne Boll, Andrew L. Kun, Bastian Pfleging, and Orit Shaer


Work is changing. Who works, where and when they work, which tools they use, how they collaborate with others, how they are trained, and how work interacts with wellbeing – all these aspects of work are currently undergoing rapid shifts. A key source of changes in work is the advent of computational tools which utilize artificial intelligence (AI) technologies. AI will increasingly support workers in traditional and non-traditional environments as they perform manual-visual tasks as well as tasks that predominantly require cognitive skills.

Given this emerging landscape for work, the theme of this Dagstuhl Seminar is human-AI interaction for work in both traditional and non-traditional workplaces, and for heterogeneous and diverse teams of remote and on-site workers. We will focus on the following research questions:

- How do we allocate tasks between humans and automation in practical settings?

- How can interfaces support an appropriate level of human understanding of the roles of human and machine and an appropriate level of trust in machines, and how can they reduce incorrect use and confusion?

- How do we support user attention for different tasks, teams, and work environments?

- How can human-automation interaction technology support both work and worker wellbeing?

At the seminar we will discuss these questions considering their interconnected nature. To promote this approach, we invite computer scientists/engineers, electrical engineers, human factors engineers, interaction designers, UI/UX designers, and psychologists from industry and academia to join this Dagstuhl Seminar.

We expect the following key results from the Dagstuhl Seminar:

- Outline of best practices and pitfalls. Which current practices for creating human-AI interfaces lead to positive outcomes for workers? And what are the known pitfalls that designers should avoid?

- List of challenges and hypotheses. Perhaps the most significant contribution of the seminar will be a list of important challenges, or research problems, and accompanying hypotheses. We expect that in the coming 3 to 10 years these problems and hypotheses will serve as inspiration for the research of the seminar attendees, and more broadly the communities involved in designing human-AI interfaces that support work.

- Roadmap(s) for research. The seminar report will include a roadmap for addressing the challenges and hypotheses. The roadmap will outline proposed research collaborations and recommended funding mechanisms, and it will lay out plans for disseminating results so that members of our community are well informed and can effectively interact with researchers and practitioners in related communities, including human-computer interaction, human factors, user experience, automotive engineering, psychology, and economics.

Susanne Boll, Andrew Kun, Bastian Pfleging, and Orit Shaer


- Larbi Abdenebaoui (OFFIS - Oldenburg, DE)

- Susanne Boll (Universität Oldenburg, DE)

- Duncan Brumby (University College London, GB)

- Marta Cecchinato (University of Northumbria - Newcastle upon Tyne, GB)

- Marios Constantinides (Nokia Bell Labs - Cambridge, GB)

- Anna Cox (University College London, GB)

- Mohit Jain (Microsoft Research India - Bangalore, IN)

- Christian P. Janssen (Utrecht University, NL)

- Naveena Karusala (Harvard University - Allston, US)

- Neha Kumar (Georgia Institute of Technology - Atlanta, US)

- Andrew L. Kun (University of New Hampshire - Durham, US)

- Sven Mayer (LMU München, DE)

- Philippe Palanque (Paul Sabatier University - Toulouse, FR)

- Bastian Pfleging (TU Bergakademie Freiberg, DE)

- Aaron Quigley (CSIRO - Eveleigh, AU)

- Michal Rinott (SHENKAR - Engineering. Design. Art - Ramat-Gan, IL)

- Shadan Sadeghian (Universität Siegen, DE)

- Stefan Schneegass (Universität Duisburg-Essen, DE)

- Orit Shaer (Wellesley College, US)

- Erin T. Solovey (Worcester Polytechnic Institute, US)

- Tim C. Stratmann (OFFIS - Oldenburg, DE)

- Dakuo Wang (Northeastern University - Boston, US)

- Max L. Wilson (University of Nottingham, GB)

- Naomi Yamashita (NTT - Kyoto, JP)

Classification

- Human-Computer Interaction

Keywords

- human-AI interaction

- future of work

Creative Commons BY 4.0
